In case you didn’t know, FiveThirtyEight is an awesome blog about statistics. Recently, they posted a challenge against the new Words With Friends (WWF) Artificial Intelligence. For the sake of science, I decided to replicate their study.

I’m an avid WWF player. As of this writing, I have played exactly 1800 games since October 2010 (which amounts to a little over one game per day). Of those, I’ve won 930, lost 864, and tied 6. Yes, I win more than I lose, but the difference isn’t statistically significant (χ² = 2.4281, df = 1, p-value = 0.1192, comparing wins against losses with ties set aside). In other words, the gap between my wins and losses is small enough that it could plausibly be due to chance.
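For anyone who wants to check the arithmetic, here is a minimal sketch of a chi-square goodness-of-fit test on the win/loss record. It assumes the test compares 930 wins and 864 losses against an even split, with the 6 ties left out; that assumption reproduces the numbers quoted above. The function names are my own, and the p-value shortcut only works for df = 1.

```python
import math


def chi_square_equal_split(observed):
    """Pearson chi-square statistic and df against equal expected counts."""
    total = sum(observed)
    expected = total / len(observed)
    stat = sum((o - expected) ** 2 / expected for o in observed)
    df = len(observed) - 1
    return stat, df


def p_value_df1(stat):
    """Upper-tail p-value of a chi-square statistic, valid for df = 1 only."""
    # With df = 1 the statistic is a squared standard normal,
    # so the tail probability is erfc(sqrt(stat / 2)).
    return math.erfc(math.sqrt(stat / 2))


wins, losses = 930, 864  # ties (6) are excluded; this is an assumption
stat, df = chi_square_equal_split([wins, losses])
p = p_value_df1(stat)
print(f"chi2 = {stat:.4f}, df = {df}, p-value = {p:.4f}")
```

With 1794 decided games the expected count is 897 wins and 897 losses, so the statistic is 2 × 33² / 897.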

I’m also not as strong a player as Oliver Roeder, the blogger over at 538. He’s only played 455 games of WWF, but his average score is much higher than mine. He also ranked somewhere in national Scrabble tournaments. I entered once, and got promptly pummeled during the first few rounds. As it turns out, one needs to average one bingo (playing all 7 tiles in a single turn) per game to even begin to play competitive Scrabble. According to the WWF app, I average one bingo every 5 or 6 games, so I still have a long way to go.

Oliver bested the AI easily, but I wanted to try out the WWF Solo Play AI myself. Was the AI that bad? Or would a more “average” player like me be challenged by it? I followed the same rules FiveThirtyEight did: no cheating, and play a total of six games. For science. (And yes, I did screenshot the first five games all at once, hence the timestamp.)

Round 1: Humans 1, Computers 0

I passed my turn. The computer opened with “SAVER”, which could have been repositioned to reach a double word tile, but it failed to do so. Okay, so maybe the AI isn’t that great…

Round 2: Humans 2, Computers 0

I’d like to point out that “ORE” down at the bottom was played by the computer. The AI seems to get the strategy of racking up points by stacking two-letter words sideways.

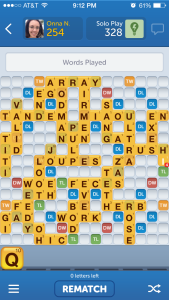

Round 3: Humans 2, Computers 1

Ouch. I was left with a Q and nowhere to play it. Even so, a paltry 254 points is a pretty bad score. I have no excuses.

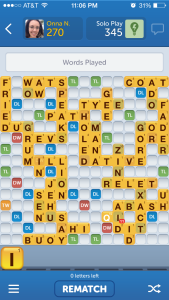

Round 4: Humans 3, Computers 1

At this point, the AI seems to be matching my abilities a little better. A score in the low 300s is more in line with my typical game.

Round 5: Humans 4, Computers 1

Round 6: Humans 4, Computers 2

All in all, I averaged 312 points per game while the AI averaged 304. This difference isn’t statistically significant either (χ² = 30, df = 25, p-value = 0.2243), though six games is a very small sample. So, the computer did more or less seem to match me in ability.
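A note on reading that statistic, with a sketch to go with it. If the test was run as a Pearson test of independence on the two columns of scores (an assumption; the exact procedure isn’t recorded here), then six games with all-different scores on each side always give χ² = 30 with df = 25, whatever the scores are. The sketch below uses placeholder labels rather than the real game scores to show this; `chi_square_independence` is a hypothetical helper, not a library function.

```python
def chi_square_independence(xs, ys):
    """Pearson chi-square statistic and df for the cross-tabulation of xs and ys."""
    n = len(xs)
    rows = sorted(set(xs))
    cols = sorted(set(ys))

    # Count how often each (x, y) pair occurs.
    counts = {(r, c): 0 for r in rows for c in cols}
    for x, y in zip(xs, ys):
        counts[(x, y)] += 1

    row_totals = {r: sum(counts[(r, c)] for c in cols) for r in rows}
    col_totals = {c: sum(counts[(r, c)] for r in rows) for c in cols}

    stat = 0.0
    for r in rows:
        for c in cols:
            expected = row_totals[r] * col_totals[c] / n
            stat += (counts[(r, c)] - expected) ** 2 / expected

    df = (len(rows) - 1) * (len(cols) - 1)
    return stat, df


# Placeholder labels standing in for six distinct scores per side.
# These are NOT the actual scores from the games above.
my_games = [1, 2, 3, 4, 5, 6]
ai_games = [11, 12, 13, 14, 15, 16]

stat, df = chi_square_independence(my_games, ai_games)
print(f"chi2 = {stat:.1f}, df = {df}")
```

Because the result depends only on the number of games, it says little about the 8-point gap itself. A paired comparison of the per-game scores would probably be more informative, but with only six games any test has little power.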

Although many players would be discouraged by constantly losing to the computer, I think that if the goal is to “challenge” oneself to improve, the AI should place itself at the user’s level, or slightly higher. In my games, the AI appears to place itself slightly below my level, but not statistically significantly so.

When playing against Oliver from FiveThirtyEight, the AI averaged 341, while he averaged a whopping 451. In Oliver’s case, it may be that the AI just doesn’t have the strategy to compete with nationally ranked players, and ends up way below his level.

My verdict? For superstar players, the WWF Solo Play AI might not be a challenge. For the rest of us, it seems to adapt to our abilities quite well, and even boost our egos by letting us win most of the time.
